Design patterns for the securing of LLM agents

Design Patterns for Securing LLM Agents against Prompt Injections by Luca Beurer-Kellner et al. describes a series of design patterns for LLM agents that significantly minimise the risk of prompt injections. They restrict the actions of agents in order to prevent them from solving arbitrary tasks. For us, these design patterns represent a good compromise between agent utility and security.

This new paper provides a solid explanation of prompt injections and then proposes six design patterns to protect against them:

- action-selector-pattern

- plan-then-execute-pattern

- llm-map-reduce-pattern

- dual-llm-pattern

- code-then-execute-pattern

- context-minimization-pattern

The problem area

The authors of the paper describe the scope of the problem as follows:

‘As long as both agents and their defenses rely on the current class of language models, we believe it is unlikely that general-purpose agents can provide meaningful and reliable safety guarantees.

This leads to a more productive question: what kinds of agents can we build today that produce useful work while offering resistance to prompt injection attacks? In this section, we introduce a set of design patterns for LLM agents that aim to mitigate — if not entirely eliminate – the risk of prompt injection attacks. These patterns impose intentional constraints on agents, explicitly limiting their ability to perform arbitrary tasks.’

In other words, the authors do not have a patent solution against prompt injection, so they offer realistic compromises, namely restricting the ability of agents to perform arbitrary tasks. This may not seem satisfactory, but it is all the more credible.

‘The design patterns we propose share a common guiding principle: once an LLM agent has ingested untrusted input, it must be constrained so that it is impossible for that input to trigger any consequential actions—that is, actions with negative side effects on the system or its environment. At a minimum, this means that restricted agents must not be able to invoke tools that can break the integrity or confidentiality of the system. Furthermore, their outputs should not pose downstream risks – such as exfiltrating sensitive information (e.g., via embedded links) or manipulating future agent behavior (e.g., harmful responses to a user query).’

Any contact with a potentially malicious token can therefore completely falsify the output for this prompt. This means that an attack via an infiltrated token can gain complete control over what happens next. This means that not only the text output of the LLM can be taken over, but also all tool calls that the LLM can potentially trigger.

Let’s now take a closer look at their design patterns.

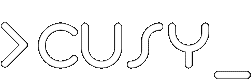

Action-Selector Pattern

‘A relatively simple pattern that makes agents immune to prompt injections – while still allowing them to take external actions – is to prevent any feedback from these actions back into the agent.’

The red colour stands for untrusted data. The LLM translates here between a request in natural language and a series of predefined actions to be performed on untrusted data.

Agents can trigger tools, but they cannot be confronted with or react to the responses of these tools. They cannot read an email or retrieve a web page, but they can trigger actions such as ‘send the user to this web page’ or ‘show the user this message’.

The authors summarise this pattern as an LLM-modulated switch statement.

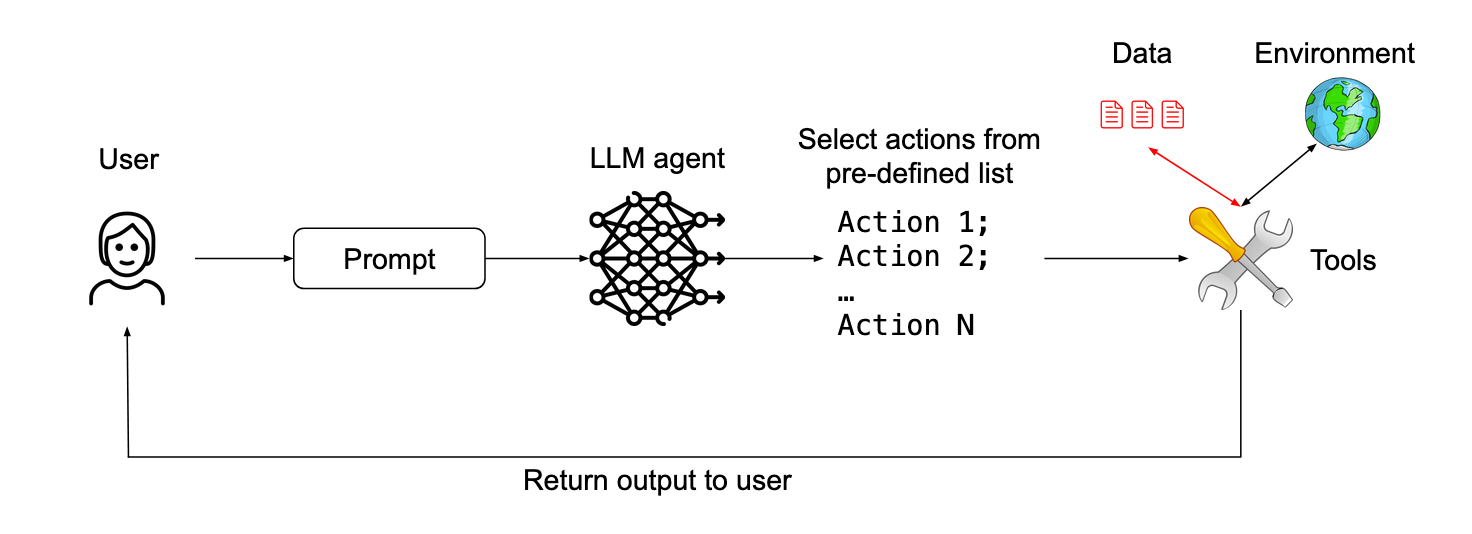

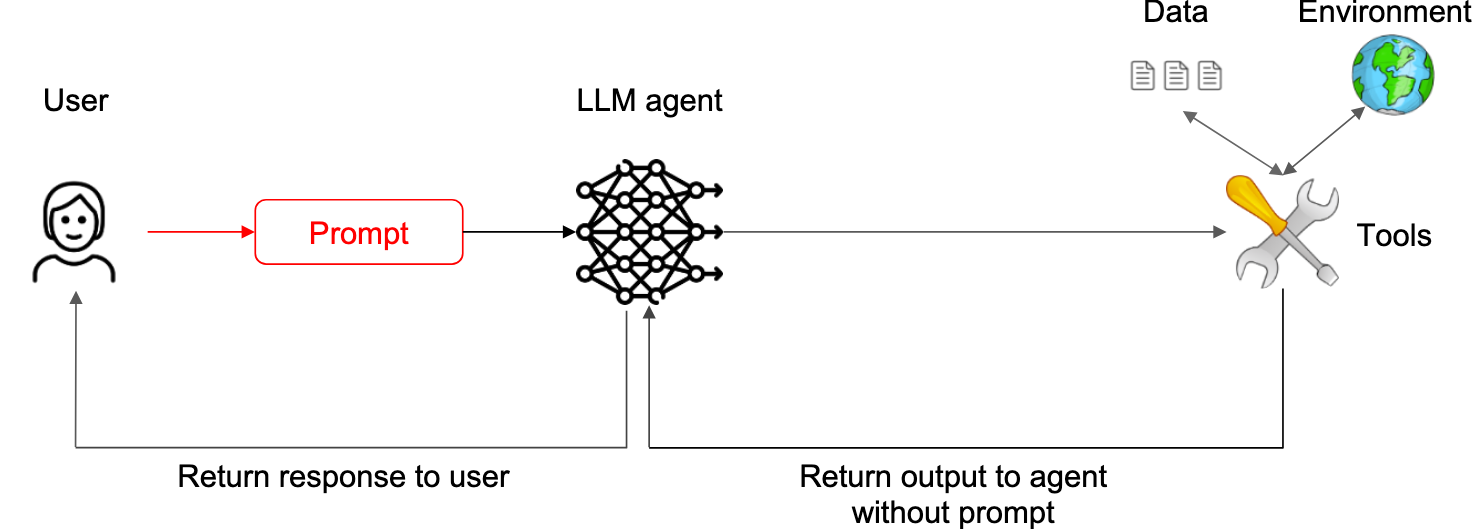

Plan-Then-Execute Pattern

‘A more permissive approach is to allow feedback from tool outputs back to the agent, but to prevent the tool outputs from influencing the choice of actions taken by the agent.’

Before processing untrusted data, the LLM defines a plan consisting of a series of permissible tool calls. A prompt injection cannot then force the LLM to execute a tool that is not part of the defined plan.

The idea here is to plan the tool calls in advance before contact with untrusted content occurs. This enables sophisticated sequences of actions without the risk of one of these actions containing malicious instructions that later trigger unplanned malicious actions.

In their example, Send today’s schedule to my boss John Doe in a calendar.read() followed by an email.write(…, "john.doe@company.com") call. The output of calendar.read() may corrupt the text of the email sent, but it cannot change the recipient of that email.

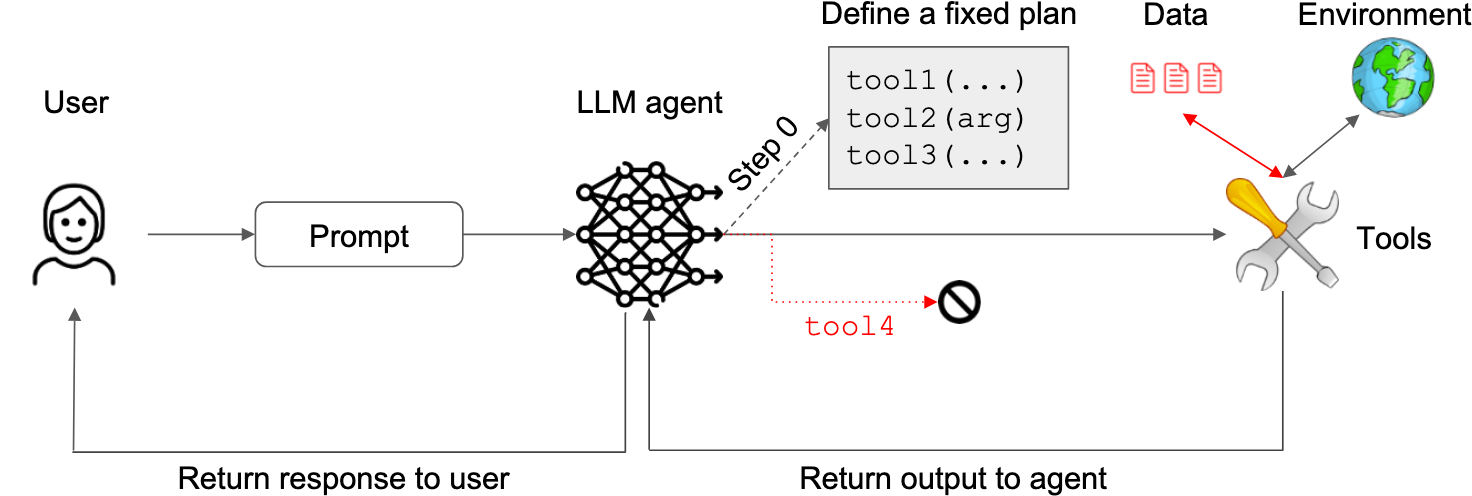

LLM Map-Reduce Pattern

The previous pattern allowed malicious instructions to influence the content sent to the next step. The map-reduce pattern includes sub-agents that are instructed to be confronted with untrusted content and whose results are later securely aggregated.

Untrusted documents are processed independently to ensure that a malicious document cannot affect the processing of another document.

In their example, an agent is asked to find files containing this month’s invoices and send them to the accounting department. Each file is processed by a subagent, which responds with a Boolean value indicating whether the file is relevant or not. Files that have been classified as relevant are then summarised and sent.

Dual LLM Pattern

In this pattern, a privileged LLM coordinates a quarantined LLM to avoid any disclosure of untrusted content. The quarantined LLM returns symbolic variables – for example $VAR1, which represents a summarised web page and which the privileged LLM can request to be displayed to the user without being exposed to the suspicious content itself.

See also

The Dual LLM pattern for building AI assistants that can resist prompt injection by Simon Willison.

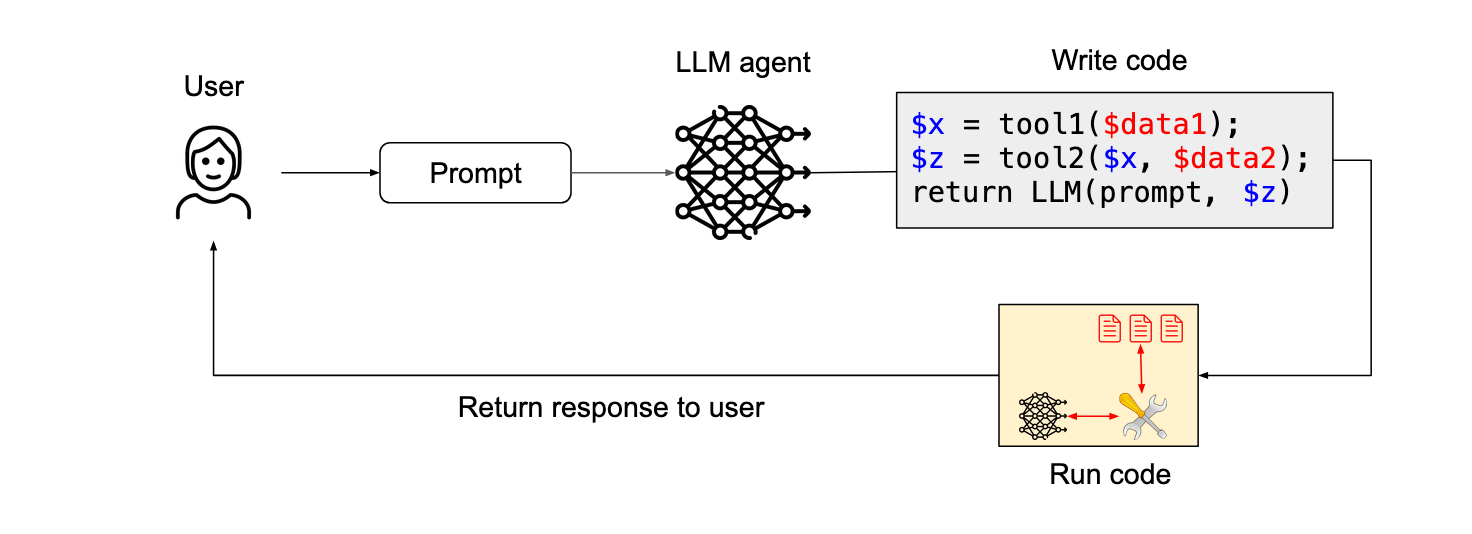

Code-Then-Execute Pattern

This pattern is described in the CaMeL paper from DeepMind: Defeating Prompt Injections by Design. It is an improved version of the dual LLM pattern, where the privileged LLM code is generated in a user-defined sandbox DSL (Domain Specific Language) that specifies which tools should be called and how their outputs should be passed to each other.

The DSL is designed to allow full data flow analysis so that any corrupted data can be flagged as such and traced throughout the process.

The LLM writes a code that can call tools and call other LLMs. The code is then executed on untrusted data.

Context-Minimization pattern

‘The safest design we can consider here is one where the code agent only interacts with untrusted documentation or code by means of a strictly formatted interface (e. g., instead of seeing arbitrary code or documentation, the agent only sees a formal API description). This can be achieved by processing untrusted data with a quarantined LLM that is instructed to convert the data into an API description with strict formatting requirements to minimize the risk of prompt injections (e.g., method names limited to 30 characters).

- Utility

- Utility is reduced because the agent can only see APIs and no natural language descriptions or examples of third-party code.

- Security

- Prompt injections would have to survive being formatted into an API description, which is unlikely if the formatting requirements are strict enough.’

The user’s prompt informs the LLM agent’s actions (for example calling a specific tool), but is then removed from the context of the LLM to prevent it from changing the LLM’s response.

Note

As much as the minimisation of the context is in line with our experience, we doubt that the reduction of the method name to up to 30 characters can increase security – creative attacks could easily fulfil this requirement and still cause damage.

Recommendations

Even if securing universal agents remains unattainable with the current possibilities, application-specific agents can be secured through principled system design. The design patterns proposed by the authors make AI agents more resistant to prompt injection attacks. They have deliberately kept the patterns simple to make them easier to understand. They make the following two recommendations for developers and decision-makers:

- Prioritise the development of application-specific agents that adhere to secure design patterns and define clear trust boundaries.

- Use a combination of design patterns to achieve robust security: no single pattern is likely to be sufficient for all threat models or use cases.

In addition to the design patterns presented, there are some general best practices that should ideally always be considered when designing an AI agent. These relate to the conservative handling of model privileges, user authorisations and confirmations. In addition, sandboxing makes it possible to define minimal authorisations and fine-grained authorisations for each action. Best security practices still apply and should not be forgotten when focussing on securing the AI component.

Summary

Prompt injections continue to be one of the biggest challenges to the responsible use of agent-based systems that many are so keen to build. Fortunately, the research community’s attention to these problems is increasing.