Agile development & DevOps

How better developer experience increases productivity

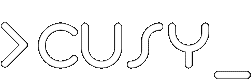

According to State of DevOps 2021, in 78 percent of organisations, DevOps implementation is stalled at a medium level. This percentage has remained almost the same for 4 years.

The vast majority of companies are stuck in mid-level DevOps development. The study divided the mid-level into three categories to explore the phenomenon in more detail.

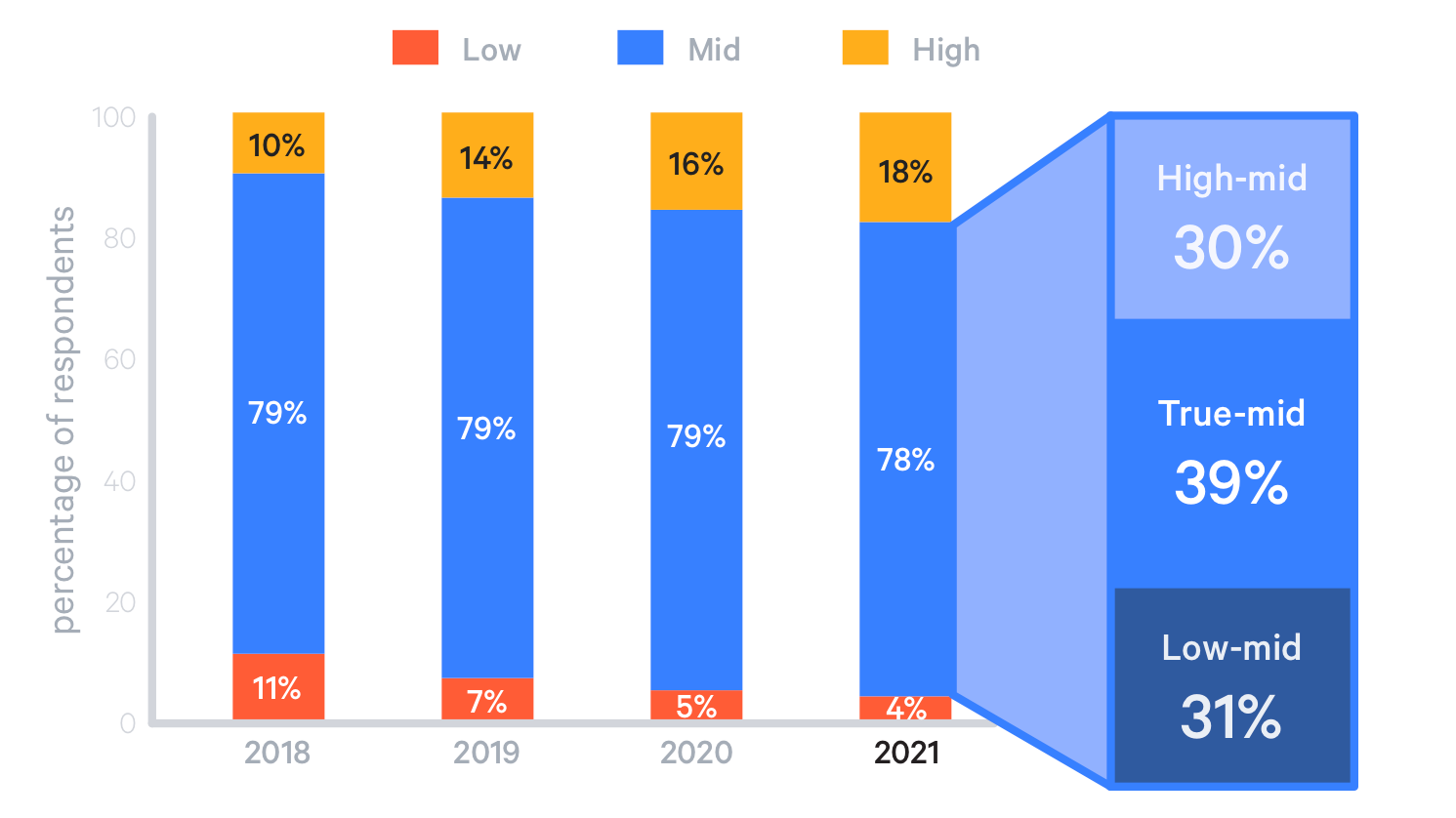

Unsurprisingly, companies that are already well advanced in DevOps implementation have also automated the most processes. These companies also have the most top-down support for the bottom-up change (→ Top-down and bottom-up design):

Fewer than 2 percent of employees at highly developed companies report resistance to DevOps at the executive level, and the figure is only 3 percent at medium-developed companies. However, only 30 percent of employees at medium-developed companies report that DevOps is actively promoted, compared to 60 percent at highly developed companies.

A definition of DevOps suggests the reasons why many companies are stuck in implementation:

DevOps is the philosophy of unifying development and operations at the levels of culture, practice and tools to achieve faster and more frequent deployment of changes to production.

– Rob England: Define DevOps: What is DevOps?, 2014

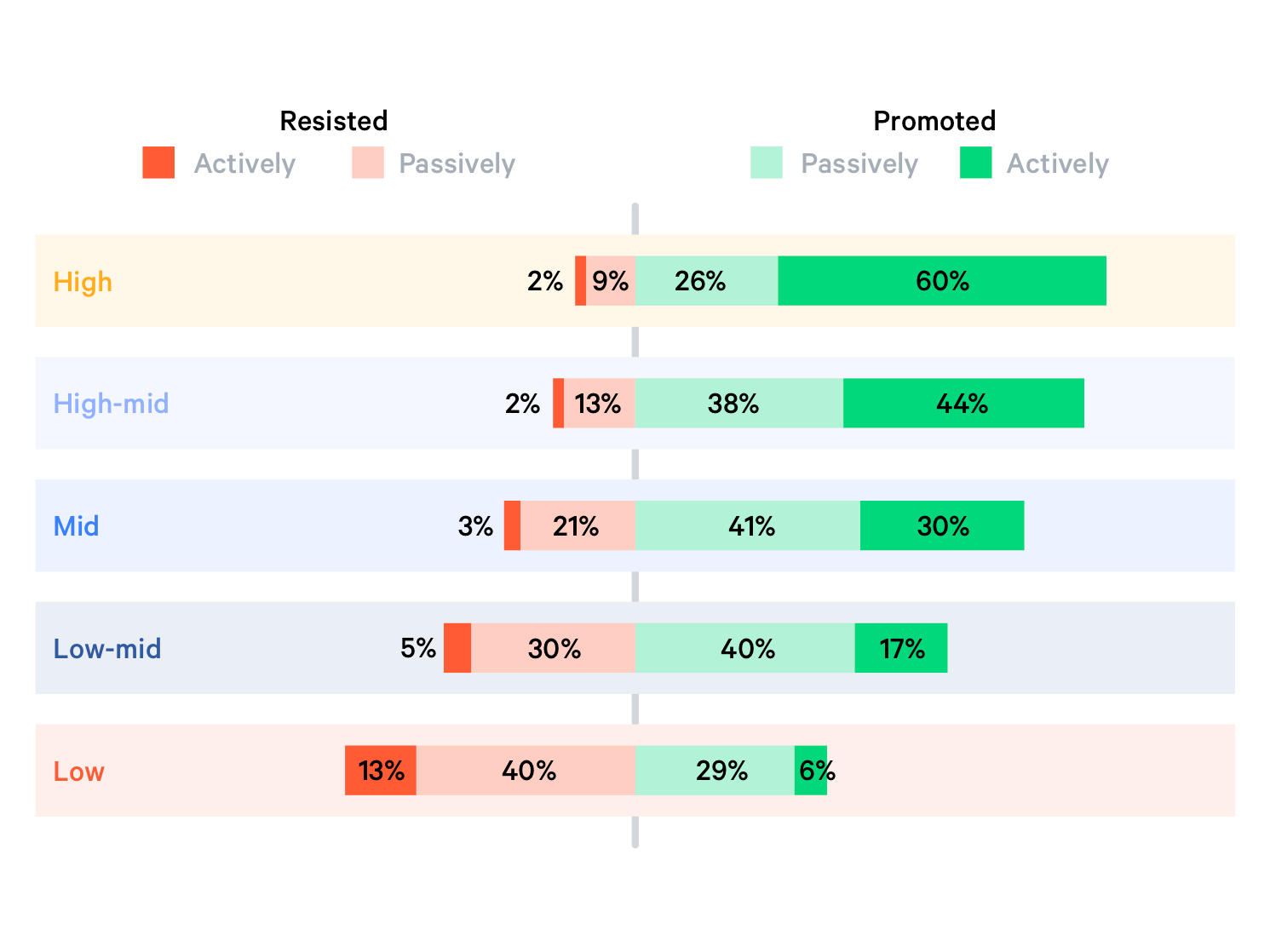

A key aspect of DevOps culture is shared ownership of the system. It is easy for a development team to lose interest in operations and maintenance when the system is handed over to another team to run. However, when the development team shares responsibility for the operation of a system throughout its lifecycle, it can better understand the problems faced by the operations team and also find ways to simplify deployment and maintenance, for example by automating deployments (→ Release, → Deploy) and improving monitoring (→ Metrics, → Incident Management, → Status page). Conversely, when the operations team shares responsibility for a system, it works more closely with the development team, which then has a better understanding of operational requirements.

DevOps changes

Organisational changes are needed to support a culture of shared responsibility. Development and operations shouldn’t be silos. Those responsible for operations should be involved in the development team at an early stage, because working side by side fosters cooperation. Handoffs and sign-offs, on the other hand, discourage shared responsibility and encourage finger-pointing.

To work together effectively, the development and operations teams must be able to make decisions and changes. This requires trust in these autonomous teams, because only then will their approach to risk change and an environment be created that is free from fear of failure.

Feedback is essential to foster collaboration between the development team and operations staff and to continuously improve the system itself. The type of feedback can vary greatly in duration and frequency:

- Reviewing one’s own code changes should happen very frequently and therefore take only a few seconds.

- However, checking whether a component can be integrated with all others can take several hours and will therefore be done much less frequently.

- Checking non-functional requirements such as timing, resource consumption, maintainability etc. can also take a whole day.

- The team’s response to a technical question should not take hours or even weeks.

- The time until new team members become productive can take weeks or even months.

- Getting a new service into production should be possible within a few days.

- However, finding errors in production systems often only succeeds after one or more days.

- A first confirmation by the target group that a change in production is accepted should be possible after a few weeks.

It should be self-explanatory that feedback loops are carried out more often when they are shorter. Early and frequent validation will also reduce later analysis and rework. Feedback loops that are easy to interpret also reduce effort significantly.

Etsy not only records whether the availability and error rate of components meet its own requirements, but also to what extent a functional change is accepted by the target group: if a change turns out to be worthless, it is removed again, thus reducing technical debt.

Developer Experience

Developer Experience (DX), which applies concepts from UX optimisation to experiences in development teams, can be a good addition here to better capture the cultural level from the development team’s perspective. In Developer Experience: Concept and Definition Fabian Fagerholm and Jürgen Münch distinguish the following three aspects:

| Aspect | Description | Topics |
|---|---|---|
| cognition | How do developers perceive the development infrastructure? | |
| affect | How do developers feel about their work? | |
| conation | How do developers see the value of their contribution? | |

This is what the day might look like for members of the development team in a less effective environment:

- The day starts with a series of problems in production.

- Since there is no aggregated analysis, the error has to be tracked down in various log files and monitoring services.

- Finally, the suspected cause is memory being swapped out to a swap file, and the operations team is asked for more memory.

- Now it’s finally time to see if there was any feedback from the QA team on the newly implemented function.

- Then it’s already the first of several status meetings.

- Finally, development could begin, if only access to the necessary API had already been provided. Instead, phone calls are now being made to the team that provides access to the API, and work cannot start for several days.

We could list many more blockages; in the end, the members of the development team end their working day frustrated and demotivated. Everything takes longer than it should and the day consists of never-ending obstacles and bureaucracy. Development team members not only feel ineffective and unproductive; when they eventually accept this way of working, learned helplessness spreads among them.

In such an environment, companies usually try to measure productivity and identify the lowest performing team members by measuring the number of code changes and tickets successfully processed. In such companies, in my experience, the best professionals will leave; there is no reason for them to remain in an ineffective environment when innovative digital companies are looking for strong technical talents.

The working day in a highly effective work environment, on the other hand, might look like this:

- The day starts with a look at the team’s project management tool, e.g. a Kanban board, followed by a short standup meeting, after which each team member is clear on what they will be working on today.

- The team member notes that the development environment has automatically received up-to-date libraries, which have also already been transferred to production as all CI/CD pipelines were green.

- Next, the changes are applied to their own development environment and the first incremental code changes are started, which can be quickly validated through unit testing.

- The next feature requires access to the API of a service for which another team is responsible. Access can be requested via a central service portal, and questions that arise are quickly answered in a chat room.

- Now the new feature can be worked on intensively for several hours without interruption.

- After the feature has been implemented and all component tests have been passed, automated tests check whether the component continues to integrate with all other components.

- Once all Continuous Integration pipelines are green, the changes are gradually released to the intended target groups in production while monitoring operational metrics.

Each member of such a development team can make incremental progress in a day and end the workday satisfied with what has been achieved. In such a work environment, work objectives can be achieved easily and efficiently and there is little friction. Team members spend most of their time creating something valuable. Being productive motivates development team members. Without friction, they have time to think creatively and apply themselves. This is where companies focus on providing an effective technical environment.


Software reviews – does high-quality software justify its cost?

What are the benefits of software reviews?

Software reviews lead to significantly fewer errors. According to a study of 12,000 projects by Capers Jones [1], errors are reduced by

- 20–50 percent in requirements reviews

- 30–60 percent in top-level design reviews

- 30–65 percent in detailed functional design reviews

- 35–75 percent in detailed logical design reviews

The study concludes:

Poor code quality is cheaper until the end of the code phase; after that, high quality is cheaper.

The study still assumes a classic waterfall model that runs from requirements through software architecture to code, then to tests and operation. In agile software development, however, this process is repeated in many cycles, so that we spend most of our time changing or adding to the existing code base. Therefore, we need to understand not only the change requirements but also the existing code. Better code quality makes it easier for us to understand what our application does and where the changes fit. If code is well divided into separate modules, I can get an overview much faster than with a large monolithic code base. Further, if the code is clearly named, I can more quickly understand what different parts of the code do without having to go into detail. The faster I understand the code, the less time I need to implement the desired change. If the code is hard to understand, on the other hand, the change takes longer and, to make matters worse, the likelihood that I will make a mistake increases. More time is lost in finding and fixing such errors. This additional time is usually accounted for as technical debt.

Conversely, I may be able to find a quick way to provide a desired function, but one that cuts across the existing module boundaries. Such a quick and dirty implementation, however, makes further development difficult in the weeks and months to come. Even in agile software development, progress without adequate code quality can only be fast at the beginning; the longer there is no review, the tougher further development becomes. In many discussions with experienced colleagues, the assessment was that regular reviews and refactorings lead to higher productivity after just a few weeks.

Which types of review solve which problems?

There are different ways to conduct a review. These depend on the time and the goals of the review.

The IEEE 1028 standard [2] defines the following five types of review:

- Walkthroughs
  - With this static analysis technique we develop scenarios and do test runs, e.g. to find anomalies in the requirements and alternative implementation options. They help us to better understand the problem, but do not necessarily lead to a decision.
- Technical reviews
  - We carry out these technical reviews, e.g. to evaluate alternative software architectures in discussions, to find errors or to solve technical problems and come to a (consensus) decision.
- Inspections
  - We use this formal review technique, for example, to quickly find contradictions in the requirements, incorrect module assignments, similar functions, etc. and to be able to eliminate these as early as possible. We often carry out such inspections during pair programming, which also provides less experienced developers with quick and practical training.
- Audits
  - Often, before a customer’s software is put into operation, we conduct an evaluation of their software product with regard to criteria such as walk-through reports, software architecture, code and security analysis as well as test procedures.
- Management reviews
  - We use this systematic evaluation of development or procurement processes to obtain an overview of the project’s progress and to compare it with any schedules.

Customer testimonials

«Thank you very much for the good work. We are very happy with the result!»

– Niklas Kohlgraf, Projektmanagement, pooliestudios GmbH


| [1] | Capers Jones: Software Quality in 2002: A Survey of the State of the Art |

| [2] | IEEE Standard for Software Reviews and Audits 1028–2008 |

Migration from Jenkins to GitLab CI/CD

Our experience is that migrations are often postponed for a very long time because they do not promise any immediate advantage. However, when the tools in use are getting on in years and no longer really fit the new requirements, technical debt accumulates that also jeopardises further development.

Advantages

The advantages of GitLab CI/CD over Jenkins are:

- Seamless integration
  - GitLab provides a complete DevOps workflow that seamlessly integrates with the GitLab ecosystem.
- Better visibility
  - Better integration also leads to greater visibility across pipelines and projects, allowing teams to stay focused.
- Lower cost of ownership
  - Jenkins requires significant effort in maintenance and configuration. GitLab, on the other hand, provides code review and CI/CD in a single application.

Getting started

Migrating from Jenkins to GitLab doesn’t have to be scary though. Many projects have already been switched from Jenkins to GitLab CI/CD, and there are quite a few tools available to ease the transition, such as:

Run Jenkinsfiles in GitLab CI/CD

A short-term solution that teams can use when migrating from Jenkins to GitLab CI/CD is to use Docker to run a Jenkinsfile in GitLab CI/CD while gradually updating the syntax. While this does not fix the external dependencies, it already provides better integration with the GitLab project.

Use Auto DevOps

It may be possible to use Auto DevOps to build, test and deploy your applications without requiring any special configuration. One of the more involved tasks of Jenkins migration can be converting pipelines from Groovy to YAML; however, Auto DevOps provides predefined CI/CD configurations that create a suitable default pipeline in many cases. Auto DevOps offers other features such as security, performance and code quality testing. Finally, you can easily change the templates if you need further customisation.

Best Practices

Start small!

The Getting Started steps above allow you to make incremental changes. This way you can make continuous progress in your migration project.

Use the tools effectively!

Docker and Auto DevOps provide you with tools that simplify the transition.

Communicate transparently and clearly!

Keep the team informed about the migration process and share the progress of the project. Also aim for clear job names and design your configuration in such a way that it gives the best possible overview. If necessary, write comments for variables and code that is difficult to understand.


Are Jupyter notebooks ready for production?

In recent years, there has been a rapid increase in the use of Jupyter notebooks, see also Octoverse: Growth of Jupyter notebooks, 2016-2019. Jupyter is a Mathematica-inspired application that combines text, visualisation, and code in one document. Jupyter notebooks are widely used by our customers for prototyping, research analysis and machine learning. However, we have also seen that their growing popularity has led to Jupyter notebooks being used in other areas of data analysis, and that additional tools have been used to run extensive calculations with them.

However, Jupyter notebooks tend to be inappropriate for creating scalable, maintainable, and long-lasting production code. Notebooks can be meaningfully versioned with a few tricks, and automated tests can also be run, but in complex projects the mix of code, comments and tests becomes an obstacle: Jupyter notebooks cannot be sufficiently modularized. Although notebooks can be imported as modules, these options are extremely limited: the notebook must first be fully loaded into memory and a new module must be created before each cell can be run in it.
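The import mechanism described above can be sketched roughly as follows: the notebook file is read completely, a fresh module object is created, and the code cells are then executed in it one after another. This is a minimal sketch using only the standard library; the helper name `load_notebook_as_module` and the demo notebook are assumptions made for illustration, and real importers (which also have to deal with IPython magics, for example) do considerably more.

```python
import json
import tempfile
import types
from pathlib import Path


def load_notebook_as_module(path, module_name="notebook_module"):
    """Execute all code cells of a notebook in a fresh module (hypothetical helper)."""
    # The whole notebook is read into memory before anything can run.
    notebook = json.loads(Path(path).read_text(encoding="utf-8"))
    # A new, empty module is created to hold the names defined by the cells.
    module = types.ModuleType(module_name)
    for cell in notebook["cells"]:
        if cell["cell_type"] != "code":
            continue  # markdown and raw cells carry no executable code
        source = cell["source"]
        # The cell source is stored either as one string or as a list of lines.
        code = source if isinstance(source, str) else "".join(source)
        exec(code, module.__dict__)
    return module


# Demo notebook with one markdown cell and two code cells (made-up content).
demo_notebook = {
    "cells": [
        {"cell_type": "markdown", "metadata": {}, "source": ["# Demo"]},
        {"cell_type": "code", "metadata": {}, "outputs": [],
         "execution_count": None, "source": ["FACTOR = 3\n"]},
        {"cell_type": "code", "metadata": {}, "outputs": [],
         "execution_count": None,
         "source": ["def scale(x):\n", "    return x * FACTOR\n"]},
    ],
    "metadata": {},
    "nbformat": 4,
    "nbformat_minor": 5,
}

with tempfile.TemporaryDirectory() as tmp:
    nb_path = Path(tmp) / "demo.ipynb"
    nb_path.write_text(json.dumps(demo_notebook), encoding="utf-8")
    demo = load_notebook_as_module(nb_path)

print(demo.scale(14))  # 42
```

Even in this small sketch, every cell runs on import, including any exploratory code, which is one reason why a notebook is a poor substitute for a regular Python module.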

This led to the first notebook war, which was essentially a conflict between data scientists and software engineers.

How To Bridge The Gap?

Notebooks are rapidly gaining popularity among data scientists and becoming the de facto standard for rapid prototyping and exploratory analysis. Netflix in particular has created an extensive ecosystem of additional tools and services, such as Genie and Metacat. These tools reduce complexity and support a broader audience of analysts, scientists and especially computer scientists. In general, each of these roles depends on different tools and languages. On the surface, the workflows seem different, though complementary. However, at a more abstract level, these workflows have several overlapping tasks:

- Data exploration
  - occurs early in a project
  - may include displaying sample data, statistical profiling, and data visualization
- Data preparation
  - iterative task
  - may include cleanup, standardising, transforming, denormalising, and aggregating data
- Data validation
  - recurring task
  - may include displaying sample data, performing statistical profiling and aggregated analysis queries, and visualising data
- Product creation
  - occurs late in a project
  - may include providing code for production, training models, and scheduling workflows

A JupyterHub can already do a good job here to make these tasks as simple and manageable as possible. It is scalable and significantly reduces the number of tools.

To understand why Jupyter notebooks are so compelling for us, we highlight their core functionalities:

- A language-independent messaging protocol for introspecting and executing code

- An editable file format for writing and capturing code, code output, and markdown notes

- A web-based user interface for interactive writing and code execution and data visualisation

Use Cases

Of our many applications, notebooks are today most commonly used for data access, parameterization, and workflow planning.

Data access

We first introduced notebooks to support data science workflows. As acceptance grew, we saw an opportunity to leverage the versatility and architecture of Jupyter notebooks for general data access. In mid-2018, we started to expand our notebooks from a niche product to a universal data platform.

From the user’s point of view, notebooks provide a convenient interface for iteratively executing code, searching and visualizing data – all on a single development platform. Because of this combination of versatility, performance, and ease of use, we have seen rapid adoption across many user groups of the platform.

Parameterization

Along with increasing acceptance, we have introduced additional features for other use cases. As a result of this work, notebooks became easy to parameterize. This provided our users with a simple mechanism to define notebooks as reusable templates.
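One way to picture a notebook as a reusable template: a cell with default parameters is followed by an injected cell that overrides them for a particular run. The sketch below works on the plain JSON structure of a notebook using only the standard library. The `parameters` and `injected-parameters` tags follow the convention used by tools such as papermill; the helper name `inject_parameters` and the sample values are assumptions for illustration and not the implementation of any particular platform.

```python
import copy


def inject_parameters(notebook, parameters):
    """Return a copy of the notebook with an override cell after the defaults."""
    result = copy.deepcopy(notebook)
    # Render each parameter as a Python assignment, e.g. region = 'EU'.
    lines = [f"{name} = {value!r}\n" for name, value in parameters.items()]
    injected = {
        "cell_type": "code",
        "metadata": {"tags": ["injected-parameters"]},
        "outputs": [],
        "execution_count": None,
        "source": lines,
    }
    cells = result["cells"]
    # Insert directly after the first cell tagged "parameters", else at the top.
    position = 0
    for index, cell in enumerate(cells):
        if "parameters" in cell.get("metadata", {}).get("tags", []):
            position = index + 1
            break
    cells.insert(position, injected)
    return result


# Template notebook with default parameters (made-up content).
template = {
    "cells": [
        {"cell_type": "code", "metadata": {"tags": ["parameters"]},
         "outputs": [], "execution_count": None,
         "source": ["region = 'US'\n", "days = 7\n"]},
        {"cell_type": "code", "metadata": {}, "outputs": [],
         "execution_count": None,
         "source": ["summary = f'{region}: last {days} days'\n"]},
    ],
    "metadata": {},
    "nbformat": 4,
    "nbformat_minor": 5,
}

report = inject_parameters(template, {"region": "EU", "days": 30})

# Run the code cells in order to show that the overrides take effect.
namespace = {}
for cell in report["cells"]:
    exec("".join(cell["source"]), namespace)
print(namespace["summary"])  # EU: last 30 days
```

Because the template itself is left untouched, the same notebook can be reused for any number of runs with different parameter sets.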

Workflow planning

We have discovered workflow planning as a further area of application for notebooks. For this purpose, notebooks have the following advantages, among others:

- On the one hand, notebooks allow interactive work and rapid prototyping, and on the other hand they can be put into production almost without any problems. For this, the notebooks are modularized and marked as trustworthy.

- Another advantage of notebooks is the choice of different kernels, so that users can select the right execution environment.

- In addition, errors in notebooks are easier to understand because they are assigned to specific cells and the outputs can be stored.
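To illustrate the last point, the following sketch runs a sequence of code cells in order and records, per cell, either its printed output or the error that stopped the run. It is a simplified stand-in for what a real kernel does; the function name `run_cells` and the sample cells are made up for this example.

```python
import contextlib
import io


def run_cells(code_cells):
    """Run code cells in order and stop at the first failing cell (hypothetical helper)."""
    namespace = {}
    results = []
    for number, code in enumerate(code_cells, start=1):
        buffer = io.StringIO()
        try:
            # Capture what the cell prints so it can be stored with the cell.
            with contextlib.redirect_stdout(buffer):
                exec(code, namespace)
        except Exception as error:
            results.append({"cell": number, "status": "error",
                            "output": buffer.getvalue(),
                            "error": f"{type(error).__name__}: {error}"})
            break  # later cells usually depend on earlier ones, so stop here
        results.append({"cell": number, "status": "ok",
                        "output": buffer.getvalue(), "error": None})
    return results


cells = [
    "values = [4, 8, 15]\nprint(len(values))",
    "ratio = sum(values) / 0",  # this cell fails
    "print('never reached')",
]

for entry in run_cells(cells):
    print(entry["cell"], entry["status"], entry["error"])
```

The stored result list shows at a glance that the first cell succeeded, that the second one raised the error, and that the third was never executed.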

Logging

In order to use notebooks not only for rapid prototyping but also productively in the long term, certain process events must be logged so that, for example, errors can be diagnosed more easily and the entire process can be monitored. IPython notebooks can use the logging module of the Python standard library or loguru, see also Jupyter Tutorial: Logging.
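A minimal sketch of such logging with the `logging` module of the standard library might look like this. The logger name, the format string and the function `load_rows` are assumptions chosen for this example.

```python
import logging
import sys

logger = logging.getLogger("notebook.pipeline")  # hypothetical logger name
logger.setLevel(logging.INFO)

# Notebook cells are often re-run, so the handler is only added once.
if not logger.handlers:
    handler = logging.StreamHandler(sys.stdout)
    handler.setFormatter(
        logging.Formatter("%(asctime)s %(name)s %(levelname)s %(message)s")
    )
    logger.addHandler(handler)


def load_rows(raw_rows):
    """Convert raw strings to integers and log every skipped row."""
    rows = []
    for line_number, raw in enumerate(raw_rows, start=1):
        try:
            rows.append(int(raw))
        except ValueError:
            logger.warning("Skipping row %d: %r is not an integer", line_number, raw)
    logger.info("Loaded %d of %d rows", len(rows), len(raw_rows))
    return rows


rows = load_rows(["1", "2", "oops", "4"])
```

The guard around `addHandler` matters in notebooks in particular: without it, every re-execution of the cell would attach another handler and each message would be printed several times.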

Testing

There have been a number of approaches to automate the testing of notebooks, such as nbval, but with ipytest writing notebook tests became much easier, see also Jupyter Tutorial: ipytest.
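The tests themselves are ordinary pytest-style functions. In a notebook, ipytest is typically set up once with `ipytest.autoconfig()`, and a cell that starts with the `%%ipytest` magic then runs the tests it contains. The sketch below shows only the plain Python part so that it runs without third-party packages; the function `normalise` and its tests are made up for this example.

```python
def normalise(values):
    """Scale values linearly to the range 0..1 (example function under test)."""
    low, high = min(values), max(values)
    if low == high:
        return [0.0 for _ in values]
    return [(value - low) / (high - low) for value in values]


# pytest-style tests: plain functions whose names start with "test_".
def test_normalise_range():
    assert normalise([2, 4, 6]) == [0.0, 0.5, 1.0]


def test_normalise_constant_input():
    assert normalise([3, 3, 3]) == [0.0, 0.0, 0.0]


# Outside a notebook, the tests can simply be called directly.
test_normalise_range()
test_normalise_constant_input()
```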

Summary

Over the last few years, we have been promoting close collaboration between software engineers and data scientists to achieve scalable, maintainable and production-ready code. Together, we have found solutions that can provide production-ready models for machine learning projects as well.

Technical debt

We usually develop the functionality requested by our customers in short cycles. Most of the time, we clean up our code only after several cycles, once the requirements hardly change any more. Sometimes, however, code that has been developed quickly must also be put into production. Technical debt, a metaphor introduced by Ward Cunningham, is a wonderful way to think about such problems. Similar to financial debt, technical debt can be used to bridge difficulties. And similar to financial debt, technical debt requires interest to be paid, namely in the form of extra effort to develop the software further.

Unlike financial debt, however, technical debt is very difficult to quantify. While we know that it hinders a team’s productivity in developing the software further, we lack the calculation basis on which to quantify this debt.

To describe technical debt, a distinction is usually made between deliberate and inadvertent technical debt, and between prudent and reckless debt. This results in the following quadrants:

| | inadvertent | deliberate |
|---|---|---|
| prudent | «Now we know how we should have done it!» | «We have to deliver now and deal with the consequences.» |
| reckless | «What is software design?» | «We don’t have time for software design!» |

Read more

- Ward Cunningham: Technical Debt

- Ward Cunningham: Complexity As Debt